America Has a New AI Strategy. What’s Really in It?

The White House released a 28-page document called America’s AI Action Plan and, as with most government papers, I assume most people haven’t read it. I did something that I rarely do: I actually read the whole thing and then dug into some related documents like the Take It Down Act and other relevant Executive Orders. The plan basically wants to deregulate AI, roll it out everywhere (schools, government, defense, industry), and steer clear of anything ‘woke.’

So I read through it all, though I’m not going to pretend I understood all of its sections. I certainly do not know much about how the Department of Defense must adopt AI or how we can quickly restore American semiconductor manufacturing.

Still, I read it, reread it, Googled and ChatGPT’d the heck out of it, printed it, and annotated it. I have it sitting on top of my PS Vita and my copy of ‘Meditations.’ So yes, I think I’m entitled to my opinion. I could be wrong, but you should know my concerns and comments come from a place of love for the good of society as a whole and an appreciation of technology. I have shamelessly been using AI quite often for over two years now.

Back to the plan: here’s what’s in it, what I think is missing, and why it matters if you’re a worker, a startup builder, a citizen who cares about speech and jobs, or just someone paying attention like I am.

The Action Plan

America’s AI Action Plan hits the usual talking points I hear constantly: how AI will “revolutionise industry” and “empower workers.” But this one adds something I don’t see as much: how it’ll “protect American values.” I think they meant eliminating references to DEI and Climate Change. Yeah, ok lol.

Ok, but who’s really in charge of America’s AI future? Is it Big Tech?

What’s in it? Big Promises

Anecdotally, I can say these LLMs have been handy, especially ChatGPT, but I think they’re highly oversold. With the ones most of us mortals have access to, regardless of how much of a genius prompter you think you are, the AI LIES, hallucinates, makes up crap, has hiccups, and sucks up to you. Until I ‘anecdotally’ see this change, I’ll keep singing that song of AI being over-glorified. Fight me.

Anyways, the AI Action Plan repeats a familiar line: “AI will improve the lives of Americans by complementing their work, not replacing it.”

I’d love to know who is tracking whether that’s true. Companies say it. The government says so. And yet, we all know people who are already worried about layoffs or being replaced by “efficiency tools.”

Two Things that Worry Me and One that Excites Me:

1. AI Will Be Deregulated – Fast

“The Federal government should not allow AI-related Federal funding to be directed toward states with burdensome AI regulations.”

Awesome, this opens the door to rapid innovation, but punishes states trying to enforce ethical or safety boundaries. So what will it be? Define ‘burdensome’.

2. Free Speech Over Facts?

“Revise the NIST AI Risk Management Framework to eliminate references to misinformation, Diversity, Equity, and Inclusion, and climate change.”

No WOKE AI. Grok, you’re saved. Ok, the plan strips AI guidelines of anything the administration views as ideological. That means what’s “true” in AI outputs could shift dramatically depending on who’s in power. Oh right, like most serious regulated things in this country. What it means to be American changes with each administration too. Are we doomed? The cynic in me wants to tell you humanity always has been, but we shall never give up. It’s in our nature.

3. Open-Source Models Will Get a Boost

“Open-weight AI models… have geostrategic value.”

This is good news for independent developers and researchers, but keeping it real, it’s also America’s latest move to make our tech the global default. The world is pretty used to this playbook by now. But why not both? We get to maintain tech dominance AND actually help small players compete against the big guys.

Right now, the most powerful AI models are “closed”: companies like Google and OpenAI control them completely. This push could change that, giving startups and researchers actual access without paying massive fees to tech giants.

Whether we like it or not, if we don’t create the open standards, China or Europe might. It levels the playing field at home while keeping America in the driver’s seat globally.

The Gaps

I can definitely tell the focus is fully on tech for corporations, government, the military, etc. What about those who are ignored or sidelined? For example:

- No real mention of artists, writers, disabled users, or independent media

- “Bias” and “truth” aren’t clearly defined. Who decides what’s neutral? What’s the current government’s definition of ‘woke’? Anything DEI related?

- No protection for workers in fields likely to be disrupted

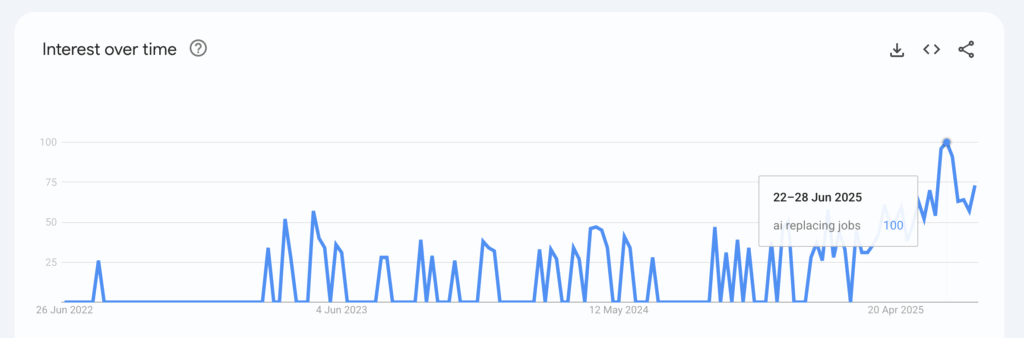

Google searches for ‘AI replacing jobs’ have spiked repeatedly since 2023, hitting their highest point in 2025. It’s clear Americans are paying attention to this fear, even as no laws have addressed it yet. Europe went the opposite way with the EU AI Act, and I have my own concerns with that approach, but the anxiety here at home is undeniable.

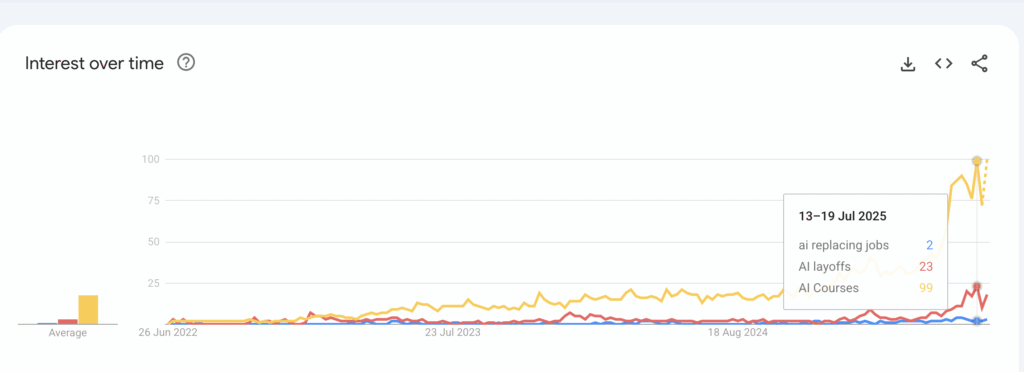

Based on this tiny find, Americans aren’t just worried, they’re preparing. Google searches for ‘AI courses’ exploded in 2025, while searches for ‘AI layoffs’ rose and ‘AI replacing jobs’ barely moved. People are watching the headlines and moving to protect themselves. I mean, what else can one do when technology moves faster than anything else at this point? Almost like a virus.

I can’t speak about AI tools affecting jobs without talking about my own work. I’m a digital marketer and I’ve seen a few shifts. I am not going to pretend some AI integrations don’t make my life easier, but as we automate and generate results faster, slowing down is still an integral part of the work, and I think many will ignore this because nobody has time to trim the roses. The roses smell good, amirite?

So yes, I can pivot if my job changes, but public policy? Not so much. Biden’s executive order tried to put some guardrails on AI, but an executive order is just that: a short-term patch. It disappeared the moment the White House changed hands.

Bye Biden’s AI Executive Order

And here’s the thing: when Trump’s administration rescinded Biden’s AI executive order, we didn’t just shift priorities, we dropped the few real public protections we had:

- No more mandatory safety testing for high-risk models.

- No deepfake labeling requirements for AI-generated media.

- No civil rights guidance for AI in hiring, housing, or lending.

Now we have a plan that moves fast, deregulates, and promises jobs will magically be fine. But without tracking or public accountability, it feels like we’re flying blind. I like the idea of the trip, but is the plane ready, or at least being built? You can hop into a moving car, but you can’t take off in a plane that isn’t ready to stay in the air for more than a few minutes and not expect to crash.

With no comprehensive law from Congress, each president rebrands AI policy to fit their ideology. This leaves:

- Federal agencies chasing the current president’s mood

- Big Tech basically steering U.S. AI in practice

- The people stuck in the middle, worried about jobs, safety, and bias

Again, Who’s Actually in Charge of AI in the U.S.A.?

The action plan makes it sound like the president is leading the AI revolution, but executive orders are band-aid solutions.

Biden had Executive Order 14110. Trump tore it up. The next president could do the same. Meanwhile:

- What is Congress doing? As far as I know, there’s still no actual federal AI law covering jobs, deepfakes, privacy, or basic consumer protection. We have the Take It Down Act, which touches on a very important issue, but I’m curious to see how it plays out and whether bad actors will take advantage of it. I mean, it’s only human to take advantage of loopholes. We see it everywhere, so this wouldn’t be any different.

- Big Tech moves faster than any lawmaker. If OpenAI, Google, or NVIDIA push a new tool tomorrow, our lives change long before Congress even holds a hearing. So we rely on Big Tech to not be unkind to people. Government should do more.

- The market calls the shots; capitalism always wins. I get it. This plan is America-first AI, but also Silicon Valley-first AI. No red tape? It’s a party every day.

I look at Congress again, but there are so many battles that I understand AI is not a priority. Until a reaction becomes necessary, until something terrible happens because of AI, we won’t have regulation at all. And trust me, removing red tape could benefit some things. My horror research brainstorming won’t get flagged for once. ChatGPT might finally stop thinking I’m a serial killer and let me ask the dark questions without flagging me.

When I finished the plan, I realized: our national AI strategy feels more like a press release than a plan. It depends on presidents and private companies, not stable laws or long-term oversight. So I guess I answered my own question. Who’s in charge?

What About Regular People Like Us?

If all this sounds distant, it’s not. This will hit our jobs, our creative freedom, and our communities long before it hits Wall Street. Darn it, it’s already here: “Microsoft researchers have revealed the 40 jobs most exposed to AI, and even teachers make the list.”

You might be wondering the same thing I did:

- Should everyone learn AI tools now?

  - I don’t know. Did everyone learn how to use the Internet or Google to carry on with their lives? I guess that gap closes the more globalised the world becomes. I do see some folks proudly refusing to learn such tools, whether because their jobs don’t require it or whatnot. I try to adapt and always have a plan B and C when possible, so if you ask me, “JC, should I learn AI?”: unless you’re close to retirement and never plan to work in an office again, you will probably have to learn some AI basics.

- Or is it okay to ignore this because you hate the idea of it?

  - This one I hear quite often: “I hate everything and anything AI.” I’m not going to be condescending; I get where this feeling comes from. The moment I say anything about AI in some of my inner circles, I can see the visceral reaction, the annoyance. But no. Even if your job, your hobbies, and the things and people you care for are not affected by AI right now, I hate to break it to you: they will be, or already are, one way or another. My grandparents magically stayed away from the internet when it went mainstream; they were pretty much retired by the time it became a necessity. Now I see them via WhatsApp, so that’s the use they give it, plus the news.

Did I convince you to think a bit more about AI today?

- You don’t have to love AI, but you should understand it enough to protect yourself at work.

- Treat it like digital literacy in the 2000s: you didn’t need to code, but you needed to know how email worked.

- How can we get involved?

  - Paying attention to local and federal reps on AI.

  - Pushing Congress for real worker protections and deepfake rules.

  - Getting literate, not obsessed. Learn just enough to not be left behind. (Don’t be me: I’ve sometimes lost it writing this on a Saturday in the middle of a gaming store while everyone plays Warhammer or Magic and, yup, I’m on my laptop.)

A bit of transparency: here’s a little of how I’ve been using AI these days.

Personal projects: you bet I asked humans (my husband and siblings) to review this one, and you bet I also asked ChatGPT to review it and help me with my layout and research. Sometimes the sources were hit or miss, so time was wasted going down the rabbit hole of wrong links. Like I said, AI lies, sometimes so subtly that you won’t be able to tell. ALWAYS BE FACT-CHECKING.

Spreadsheets are my forte and my weakness too, depending on what I’m supposed to do with them. When manual work is required, they really are my weakness. ChatGPT’s latest Agent has helped me with grouping and saved me time, thanks to a tip from my husband. But I still have to double and triple check; it’s not a magic wand. We can pretend it’s magical, but I don’t play that game.

My Closing Thought

Reading this plan left me both excited and uneasy. And uneasy. Excited because I think AI has the potential to do incredible things. Uneasy because AI is still very stupid and some treat it as a perfect science, which it’s far from. And uneasy because the people shaping its rollout aren’t the ones living with the consequences; we, the people, are.

If Congress keeps snoozing, this “national AI strategy” will stay a political document while Big Tech does whatever it wants, as usual. And the rest of us will be left reacting to the changes, instead of shaping them.

Did you read the full plan? Want to discuss? This document isn’t just a roadmap, it’s a signal. The future of AI in America looks like it will be fast, messy, and political. If we don’t speak up or even read what’s being passed, we don’t get to complain when we’re left behind.